We should consider using this in MongoDB when starting with --repair. Also, the performance of these indexing mechanisms is evaluated. WT-3276 introduced an option, log=(recover=salvage), to recover corrupted log files. The model and implementation of fzMongoDB also include an indexing mechanism that accelerates the retrieval process on fuzzy queries. It is implemented and integrated on the mongoDB server using the resources it provides. This paper describes fzMongoDB, a fuzzy database engine that provides the mongoDB database with the capacity to store documents with imprecise information and to retrieve them in a flexible way. In the case of MongoDB, there are few proposals, and they are not very complete. For this reason, it is useful to provide fuzzy extensions to these DBMSs. ESPOO, Finland, J- ( BUSINESS WIRE )-Tecnotree, a Finland-based global provider of digital transformation solutions for Digital Service Providers (DSPs), today announced that it has. On the other hand, information sources for Big Data algorithms can contain imprecise information, and the way to obtain, aggregate and present results can have an imprecise nature as well. We are using zlib on both journal files and data files, but we did see the same issue with snappy.

I am trying to find out how I can clean up the corresponding journal entries. Let's consider the following examples that demonstrate the mapReduce command. The MongoDB mapReduce command is provided to accomplish this task. Map-reduce is a data processing technique that condenses large volumes of data into aggregated results. Deleting a user message from a Messages collection. MongoDB Map Reduce example using Mongo Shell and Java Driver. criteria: this field specifies the selection criteria entered by the user.
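The map-reduce flow described above can be sketched in plain JavaScript, without a MongoDB server. This is only an in-memory illustration of the map, emit and reduce steps, not the server's implementation: the `messages` array, its field names, and the `mapFn`, `reduceFn` and `mapReduce` helpers are all hypothetical. In the real mapReduce command the map function reads the document through `this` and calls a global `emit`, which this sketch replaces with explicit arguments.

```javascript
// In-memory sketch of the map/emit/reduce flow; no MongoDB server is needed.
// The documents and field names below are hypothetical.
const messages = [
  { user: "ann", length: 12 },
  { user: "bob", length: 7 },
  { user: "ann", length: 30 },
];

// Map step: emit one (key, value) pair per document.
function mapFn(doc, emit) {
  emit(doc.user, doc.length);
}

// Reduce step: condense all values emitted for one key into a single value.
function reduceFn(key, values) {
  return values.reduce((sum, v) => sum + v, 0);
}

// Hypothetical driver: group emitted values by key, then reduce each group.
function mapReduce(docs, map, reduce) {
  const groups = new Map();
  for (const doc of docs) {
    map(doc, (key, value) => {
      if (!groups.has(key)) groups.set(key, []);
      groups.get(key).push(value);
    });
  }
  const out = [];
  for (const [key, values] of groups) {
    out.push({ _id: key, value: reduce(key, values) });
  }
  return out;
}

const totals = mapReduce(messages, mapFn, reduceFn);
console.log(totals);
```

The output has one entry per distinct key, which is the sense in which map-reduce condenses many documents into aggregated results.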

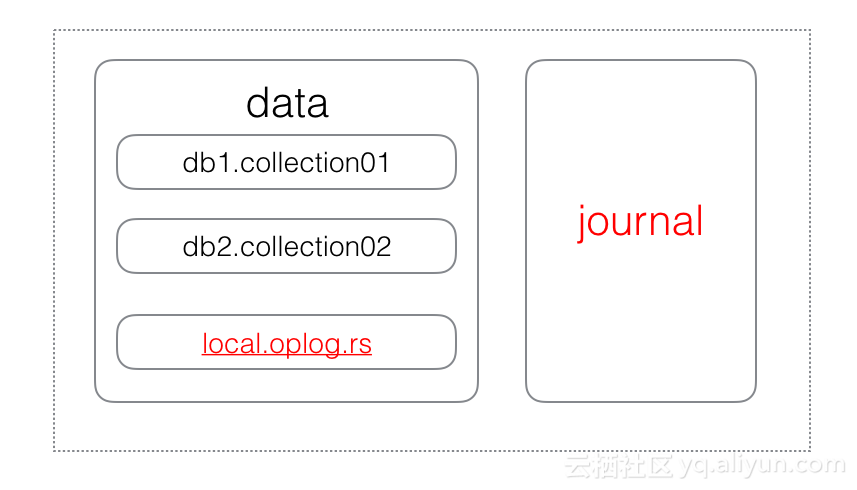

The syntax for the MongoDB find() method is as follows: db.collection.find( The files are on ZFS, with atime disabled. I want to delete any trace of a particular database item, e.g. The MongoDB find method fetches the documents in a collection and returns a cursor for the documents matching the criteria requested by the user. We are using MongoDB 3.2 on FreeBSD 10.1. For this last use, due to their scalability, NoSQL databases such as mongoDB, a document-oriented DBMS, have been consolidated as a powerful tool for the storage and processing of large volumes of data. From my understanding of the documentation, the journal files would be flushed frequently, not left on the disk indefinitely.
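The idea that find takes selection criteria and returns a cursor over the matching documents can be illustrated with a small in-memory stand-in. This is a sketch under stated assumptions, not MongoDB's query engine: the `docs` array and the `find` generator are hypothetical, only exact field equality is supported, and the "cursor" is simply a lazy JavaScript iterator.

```javascript
// Hypothetical documents; an in-memory stand-in for a collection.
const docs = [
  { _id: 1, user: "ann", read: false },
  { _id: 2, user: "bob", read: true },
  { _id: 3, user: "ann", read: true },
];

// Returns a lazy iterator (a stand-in for a cursor) over the documents whose
// fields equal every field in `criteria`. Only exact equality is handled.
function* find(collection, criteria = {}) {
  for (const doc of collection) {
    const matches = Object.entries(criteria).every(([k, v]) => doc[k] === v);
    if (matches) yield doc;
  }
}

const cursor = find(docs, { user: "ann" });
const results = [...cursor]; // drain the iterator, as a shell would a cursor
console.log(results.map((d) => d._id));

// Empty criteria match every document.
const all = [...find(docs)];
```

Because the iterator is lazy, no document is examined until the caller starts consuming results, which loosely mirrors how a cursor defers fetching.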

The sources of these data can be varied, from data streams that will be processed in real time, to the exploitation of transactional data stored in databases. Once the file exceeds that limit, WiredTiger creates a new journal file. Big Data is a paradigm in which valuable information is obtained through the analysis of a large amount of data. WiredTiger journal files for MongoDB have a maximum size limit of approximately 100 MB.
